Detecting cancer with AI

An innovative artificial intelligence (AI) application could help examine tissue samples and identify signs of cancer. PathoFusion was developed by an international collaboration led by Associate Professor Xiu Ying Wang and Professor Manuel Graeber of the University of Sydney, with support from the Australian Nuclear Science and Technology Organisation (ANSTO).

Study co-author Richard Banati, an ANSTO Professor of Medical Radiation Sciences/Medical Imaging, said, “The idea behind PathoFusion was to create a novel, advanced, deep learning model to recognise malignant features and immune response markers, independent of human intervention, and map them simultaneously in a digital image.”

A bifocal deep learning framework was designed using a convolutional neural network (ConvNet or CNN), a type of model originally developed for natural image classification. This kind of deep learning algorithm takes an input image, assigns importance to various aspects or objects in it and differentiates one from another.
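To give a feel for the basic operation inside a convolutional network, here is a minimal sketch in plain Python. It is not PathoFusion's code: the function name `convolve2d`, the toy image and the filter values are all assumptions chosen for illustration. It slides a small filter over an image and reports where the filter responds strongly, which is the building block a CNN stacks and learns many times over.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding) and return the response map.

    Both arguments are lists of lists of numbers. This is the sliding
    dot product used in CNN layers (technically cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Hypothetical one-row "image" with a dark-to-bright edge in the middle.
toy_image = [[0, 0, 1, 1]]
# Hypothetical hand-written filter that responds to a left-to-right increase.
edge_filter = [[-1, 1]]

response = convolve2d(toy_image, edge_filter)
print(response)  # [[0, 1, 0]]: the filter fires only at the edge
```

In a trained network the filter values are not written by hand as they are here; they are learned from labelled examples, such as the regions marked by neuropathologists.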

The experiment to evaluate the model involved examining tissue from cases of glioblastoma, an aggressive cancer that affects the brain or spine. The team used the expert input of neuropathologists to ‘train’ the software to mark key features. The findings were published in the journal Cancers.

Experiments confirmed that the application achieved a high level of accuracy in recognising and mapping six typical neuropathological features that are markers of a malignancy. PathoFusion identified forms and structural features with a precision of 94% and sensitivity of 94.7%, and immune markers at a precision of 96.2% and sensitivity of 96.1%.
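For readers unfamiliar with these two measures, the short sketch below shows how precision and sensitivity are computed from counts of correct and incorrect detections. The counts are invented purely for illustration and are not taken from the study.

```python
def precision(true_pos, false_pos):
    """Share of flagged regions that really contain the feature."""
    return true_pos / (true_pos + false_pos)


def sensitivity(true_pos, false_neg):
    """Share of regions containing the feature that were flagged (recall)."""
    return true_pos / (true_pos + false_neg)


# Hypothetical counts, not from the paper:
# 90 regions correctly flagged, 10 flagged in error, 30 missed.
tp, fp, fn = 90, 10, 30

print(precision(tp, fp))    # 0.9
print(sensitivity(tp, fn))  # 0.75
```

High precision means few false alarms; high sensitivity means few missed features. A model needs both to be useful for mapping tissue.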

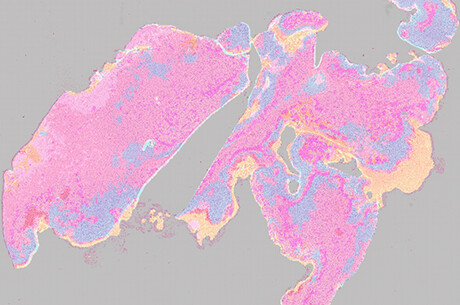

The application fuses layers of information about dead or dying tissue and the proliferation of microscopic blood vessels and other vasculature with the expression of a tumour genetic marker, CD276, and presents the combined data as a single heatmap image. The heatmap uses strong colours to depict the features and their distribution, whereas conventional staining techniques are often monochromatic.
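The idea of layering two kinds of information into one colour image can be sketched as follows. This is an illustrative assumption, not the fusion method used by PathoFusion: the function name `fuse_maps`, the choice of red and blue channels and the toy score grids are all hypothetical.

```python
def fuse_maps(morphology, marker):
    """Combine two same-shaped grids of scores in [0, 1] into one RGB grid.

    Assumed colour scheme: red encodes the morphology score,
    blue encodes the marker score, green is left at zero.
    """
    same_shape = len(morphology) == len(marker) and all(
        len(a) == len(b) for a, b in zip(morphology, marker)
    )
    if not same_shape:
        raise ValueError("maps must have the same shape")
    return [
        [(round(255 * m), 0, round(255 * k)) for m, k in zip(row_m, row_k)]
        for row_m, row_k in zip(morphology, marker)
    ]


# Hypothetical per-tile scores for a strip of two tiles.
morphology_scores = [[1.0, 0.0]]  # e.g. a structural feature detected in tile 1
marker_scores = [[0.0, 1.0]]      # e.g. marker expression detected in tile 2

print(fuse_maps(morphology_scores, marker_scores))
# [[(255, 0, 0), (0, 0, 255)]]
```

Tiles where both scores are high would appear purple under this scheme, making overlap between the two layers visible at a glance.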

“The research confirmed that it is possible to train neural networks effectively using only a relatively small number of cases; that should be useful for some scenarios,” Banati said.

The research was successful in efficiently training a convolutional neural network to recognise key features in stained slides; improving the model and increasing the effectiveness of feature recognition (with fewer physical cases than conventionally needed for neural network training); and establishing a method to include immunological data.
